Search Engine Scraping

Search Engine Scraper

The final solution on our list is Apify, which provides a capable Google scraper tool. With a little configuration, you can scrape Google search results with ease.

Search Engine Harvester

SERP API is a good solution for people who need to extract search engine data without worrying about data quality and speed. Whether you already have Google scrapers and just need reliable proxies, or you want a high-quality Google Search Results API, Scraper API is a good option. GoogleScraper parses Google search engine results (and those of many other search engines) easily and quickly. It lets you extract all found links together with their titles and descriptions programmatically, so you can process the scraped data further.
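To make "extracting links and their titles programmatically" concrete, here is a minimal sketch using only Python's standard-library `html.parser`. It is not how GoogleScraper itself works; the `LinkExtractor` class, the `extract_links` helper and the `SAMPLE` markup are hypothetical names and data invented for illustration, and real result pages are far messier.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a href=...> element in a page."""

    def __init__(self):
        super().__init__()
        self.links = []      # finished (href, text) pairs
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Return a list of (url, link text) tuples found in the HTML string."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


# Hypothetical, hand-written markup standing in for a saved results page.
SAMPLE = """
<div class="result"><a href="https://example.com/a"><h3>First result</h3></a></div>
<div class="result"><a href="https://example.org/b">Second <b>result</b></a></div>
"""

for href, title in extract_links(SAMPLE):
    print(href, "|", title)
```

The extracted pairs can then be written to a CSV file or passed to whatever further processing you need.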

Search Engine Harvester Tutorial

The code base is also much less complex without threading/queueing and complex logging capabilities. Some people, however, want a quick service that lets them scrape some data from Google or another search engine. For this reason, I created the web service scrapeulous.com.

Search Engine Scraping

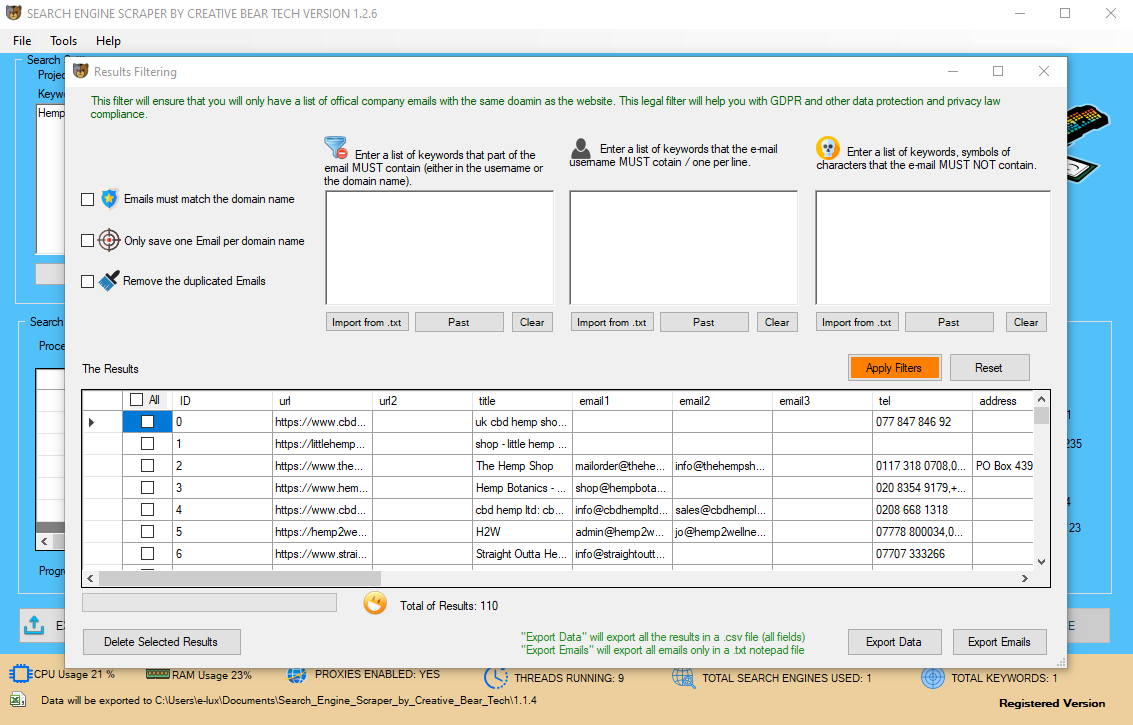

In this guide, we will give you a full walkthrough of how to use the Email Extractor and Search Engine Scraper by Creative Bear Tech. The guide is divided into sections and follows a logical sequence. Then use this Google Search Scraper to find out whether you are already ranked. Scraper by Data-Miner.io gets data out of web pages and into Microsoft Excel spreadsheets or CSV files. Content left, right and center, but nothing tangible to point you in the right direction. Google made itself incalculably valuable when it became the cartographer of the internet, and we just can’t thank them enough.

Methods Of Scraping Google, Bing Or Yahoo

Proxies come in here because they hide your original IP address and can be rotated easily. They need to be rotated because the IP address is the indicator a search engine uses to recognize a scraper. It shouldn’t be your real IP address, because you could get in trouble with your ISP. If it’s a proxy IP address, it may eventually get blocked, and then you can swap it out for another one. Without search engines, the internet would be one big pile of mush.

The tool is called Google Docs, and since it fetches Google search pages from within Google’s own network, the scraping requests are less likely to get blocked.

Scrape all the URLs for a list of companies in my Google Sheet under “Company Update” for the day. Regarding this second question, let us say I have the company codes as below , , , , , , , , . (The company codes are like the symbols used by NASDAQ, such as AAPL for Apple Inc.)
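The proxy rotation described above (use each proxy in turn, and swap out any that gets blocked) can be sketched in a few lines of Python. This is an illustration only: `ProxyRotator` and its methods are hypothetical names, the addresses come from a reserved documentation range, and no network requests are made.

```python
from collections import deque


class ProxyRotator:
    """Hand out proxies round-robin and drop the ones reported as blocked."""

    def __init__(self, proxies):
        self._pool = deque(proxies)

    def next_proxy(self):
        """Return the next proxy in the rotation."""
        if not self._pool:
            raise RuntimeError("no working proxies left")
        proxy = self._pool[0]
        self._pool.rotate(-1)  # move the proxy just used to the back
        return proxy

    def mark_blocked(self, proxy):
        """Remove a proxy that the search engine has started blocking."""
        if proxy in self._pool:
            self._pool.remove(proxy)

    def add(self, proxy):
        """Add a fresh proxy to replace a blocked one."""
        self._pool.append(proxy)

    def __len__(self):
        return len(self._pool)


# Placeholder addresses from the 203.0.113.0/24 documentation range.
rotator = ProxyRotator(["203.0.113.1:8080", "203.0.113.2:8080", "203.0.113.3:8080"])
first = rotator.next_proxy()
second = rotator.next_proxy()
rotator.mark_blocked("203.0.113.3:8080")   # pretend the third proxy was banned
third = rotator.next_proxy()               # rotation continues with the survivors
print(first, second, third, len(rotator))
```

In a real scraper, `mark_blocked` would be called when a request through that proxy returns a CAPTCHA page or an error status.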

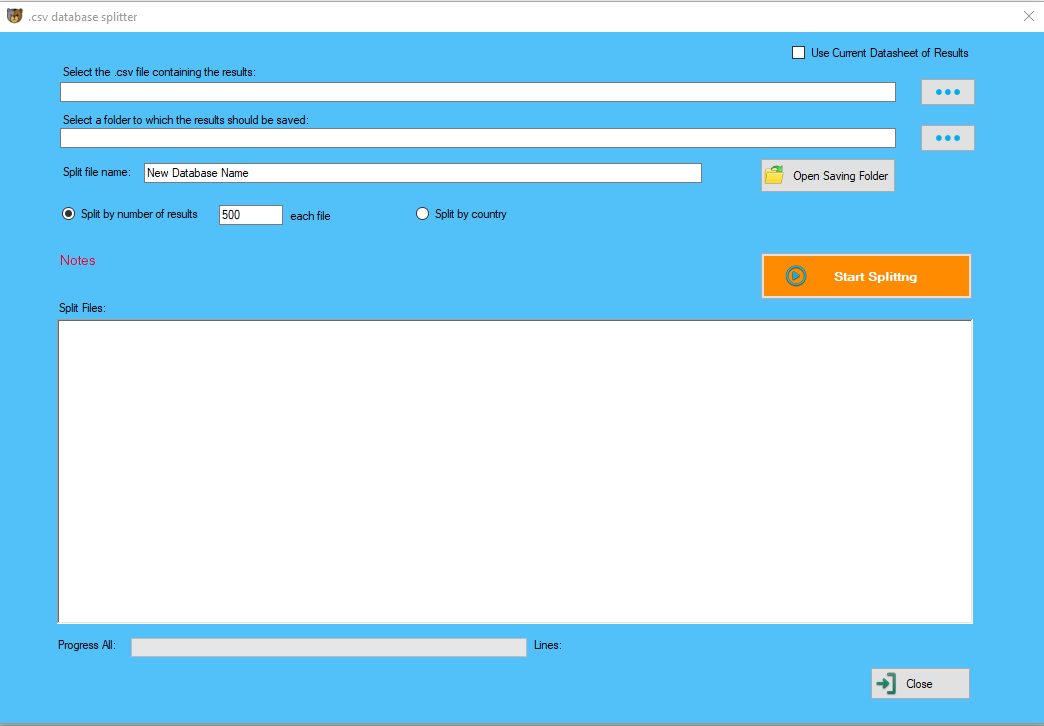

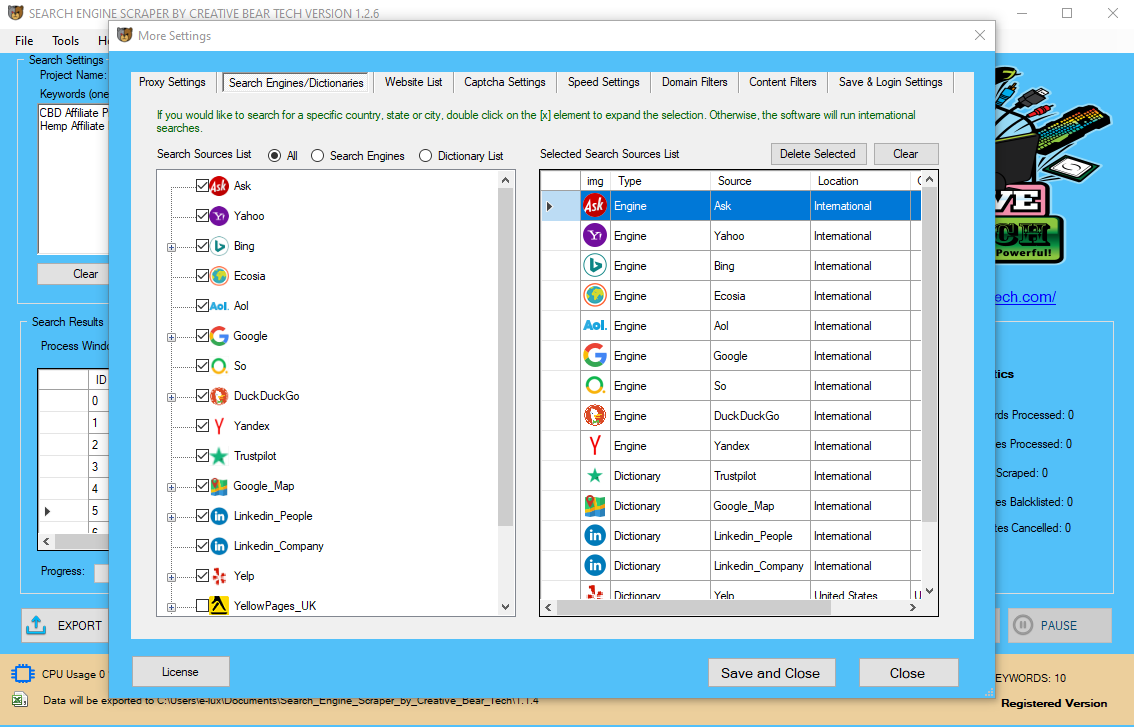

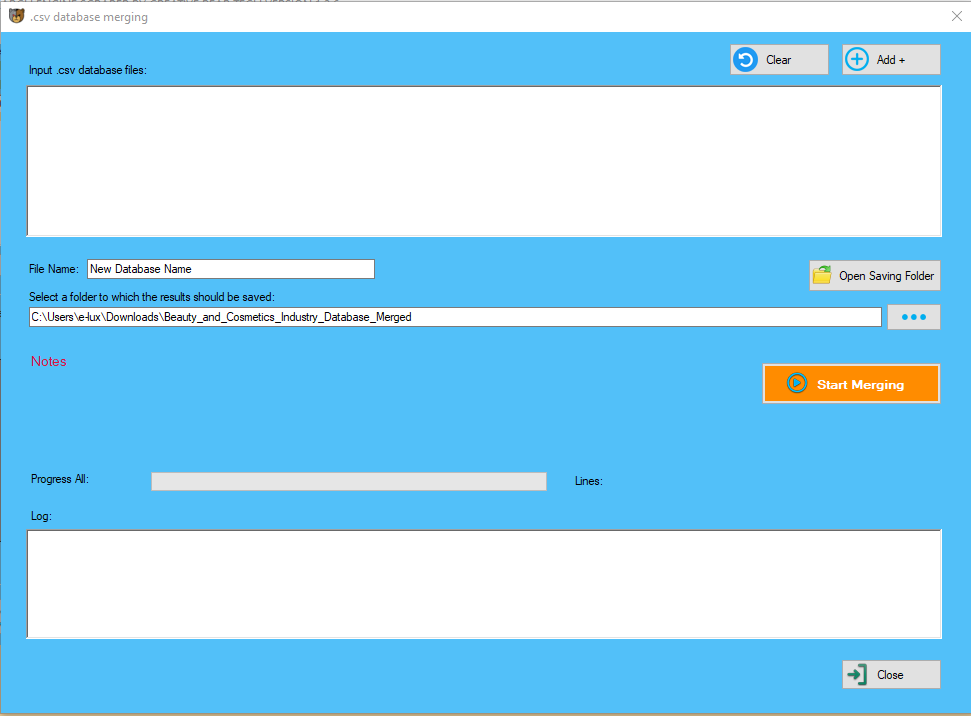

Therefore it is nearly impossible to simulate such a browser manually with HTTP requests. This means Google has numerous ways to detect anomalies and inconsistencies in browsing usage. The dynamic nature of JavaScript alone makes it impossible to scrape undetected. Chrome has around 8 million lines of code and Firefox even 10 million. Huge companies invest a lot of money to push technology forward (HTML5, CSS3, new standards), and each browser has a unique behaviour. The Google Search Scraper from here already contains code to recognize when it has been detected and to abort in that case.

It supports a wide range of different search engines and is much more efficient than GoogleScraper. Capture tables or scrape data from any website, such as Zoominfo.com or Data.com, or collect email addresses. If you are a recruiter, work in sales, or are just interested in price comparison and competitor analysis, this app is for you. For example, in one recent scrape I harvested 7 million URLs from Bing in a couple of hours. A module to scrape and extract links, titles and descriptions from various search engines.

One thing to remember is that all of these search engines are private companies. There are many more, but these seven are the best of the best and should be your first choice when looking for a solution to your SERP data needs. Google is the head cartographer and can, with the right methods, yield the most fruitful scrapes around. I’ll get into more of the terminology in the example for Google, and then go into the other search engines. To scrape a search engine is to harvest all the data on it. Scrape is an ugly word for crawl, suck, draw out of, or harvest (all of which are ugly words in and of themselves).

For scraping, Scrapy seems to be a popular choice, a web app called ScraperWiki is very interesting, and there is another project to extract its library for offline/local usage. Mechanize was brought up quite a few times in various discussions too.

Some programmers who create scraper sites may purchase a recently expired domain name to reuse its SEO power in Google. Whole businesses exist that focus on understanding all expired domains and utilising them for their historical ranking ability. Doing so allows SEOs to use the already-established backlinks to the domain name. Some spammers may try to match the topic of the expired site or copy the existing content from the Internet Archive to maintain the authenticity of the site so that the backlinks don't drop.

The more threads you have, the more open connections to the search engine and the faster your scrape. This may sound great, but it also leaves your proxy IP very vulnerable to getting banned or blocked. If I recall correctly, that limit was 2,500 requests/day. This will scrape with three browser instances, each having its own IP address. Unfortunately, it is currently not possible to scrape with different proxies per tab.

Boasting a 100% success rate and an easy-to-use API, this solution is great for anyone who needs guaranteed fast, high-quality search engine data. However, with prices starting at $50 for 50,000 Google searches, using SERP API as your main source of SERP data can be expensive if you need large volumes of data.

The target may be a simple WordPress blog with a search feature from which you want to harvest all the URLs for a specific keyword or number of keywords, or a major search engine like Google, Bing or Yahoo. There are powerful command-line tools, curl and wget for example, that you can use to download Google search result pages. The HTML pages can then be parsed using Python’s Beautiful Soup library or the Simple HTML DOM parser for PHP, but these methods are technical and involve coding. The other problem is that Google is very likely to temporarily block your IP address should you send a few automated scraping requests in quick succession.

Scraping search engines is essential for some businesses, but some search engines do not allow automated access to their search results. As a result, the service of a search engine scraper may be needed. The idea here is that the app will continuously monitor the proxies, removing non-working ones and scraping and adding new ones from time to time, so that the app always has enough proxies to run on.

Perhaps you have your own list of websites that you created using ScrapeBox or some other software and you want to parse them for contact details. You will need to go to “More Settings” on the main GUI and navigate to the tab titled “Website List”. I recommend splitting your master list of websites into files of 100 websites per file. Splitting up larger files allows the software to run multiple threads and process all the websites much faster.

When you bought your copy of the Email Extractor and Search Engine Scraper by Creative Bear Tech, you should have received a username and a licence key. This licence key allows you to run the software on one machine. Your copy of the software will be tied to your MAC address.
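Splitting a master list of websites into files of 100 websites each, as recommended above, is easy to script. The following is a minimal sketch and not part of the Creative Bear Tech software: the function name `split_website_list` and the `websites_001.txt` naming scheme are assumptions made for illustration. It expects a plain text file with one URL per line.

```python
import tempfile
from pathlib import Path


def split_website_list(master_file, out_dir, per_file=100):
    """Split a one-URL-per-line text file into numbered files of `per_file` URLs."""
    lines = Path(master_file).read_text().splitlines()
    urls = [line.strip() for line in lines if line.strip()]  # skip blank lines
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for start in range(0, len(urls), per_file):
        # Hypothetical naming scheme: websites_001.txt, websites_002.txt, ...
        part = out / f"websites_{start // per_file + 1:03d}.txt"
        part.write_text("\n".join(urls[start:start + per_file]) + "\n")
        written.append(part)
    return written


# Demo with 250 made-up URLs in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    master = Path(tmp) / "master.txt"
    master.write_text("\n".join(f"https://example.com/site{i}" for i in range(250)))
    parts = split_website_list(master, Path(tmp) / "parts")
    part_sizes = [len(p.read_text().splitlines()) for p in parts]
    print([p.name for p in parts], part_sizes)
```

Each resulting file can then be loaded as a separate website list source.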

- You should really only use the “integrated web browser” if you are using a VPN such as Nord VPN or Hide my Ass VPN (HMA VPN).

- The “Delay Request in Milliseconds” setting helps keep the scraping activity relatively “human” and helps avoid IP bans.

- Then you need to select the number of “threads per scraper”.

- This is the number of keywords you want to process at the same time per website/source.

- For example, if I select 3 sub scrapers and 2 threads per scraper, the software would scrape Google, Bing and Google Maps at 2 keywords per website.

- So the software would simultaneously scrape Google for 2 keywords, Bing for 2 keywords and Google Maps for 2 keywords.
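The relationship between sub scrapers, threads per scraper and the request delay can be modelled roughly as follows. This is a sketch of the concept only, not the software's actual implementation: `fake_scrape`, `scrape_engine` and `run_scrapers` are hypothetical names, and the "scrape" is simulated with a short sleep instead of a network request.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_scrape(engine, keyword, delay_ms):
    """Stand-in for a real request: wait for the configured delay, return a label."""
    time.sleep(delay_ms / 1000)
    return f"{engine}:{keyword}"


def scrape_engine(engine, keywords, threads_per_scraper, delay_ms):
    """One sub scraper: process `threads_per_scraper` keywords at the same time."""
    with ThreadPoolExecutor(max_workers=threads_per_scraper) as pool:
        return list(pool.map(lambda kw: fake_scrape(engine, kw, delay_ms), keywords))


def run_scrapers(engines, keywords, threads_per_scraper, delay_ms=0):
    """Run one sub scraper per engine, all of them simultaneously."""
    with ThreadPoolExecutor(max_workers=len(engines)) as pool:
        futures = {
            engine: pool.submit(scrape_engine, engine, keywords, threads_per_scraper, delay_ms)
            for engine in engines
        }
        return {engine: future.result() for engine, future in futures.items()}


# 3 sub scrapers with 2 threads each: up to 6 keywords are in flight at once.
results = run_scrapers(
    ["Google", "Bing", "Google Maps"],
    ["cbd oil", "cbd gummies", "hemp shop", "vape shop"],
    threads_per_scraper=2,
    delay_ms=10,
)
for engine, found in results.items():
    print(engine, found)
```

Raising either number increases throughput, but it also increases the number of simultaneous connections per search engine, which is what makes bans more likely.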

For example, an expired website about a photographer may be re-registered to create a site about photography tips, or its domain name may be used in a private blog network to power the owner’s own photography site. Depending on the objective of a scraper, the methods by which websites are targeted differ. For example, websites with large amounts of content, such as airlines, consumer electronics retailers and department stores, may be routinely targeted by their competitors just to stay abreast of pricing information. Essentially, the very reason that publishers originally wanted to be listed in SERPs is being circumvented.

The last time I looked at it, I was using an API to search through Google. I am still confused about why this one works, but if it is wrapped inside a function it no longer works. By the way, the scraper looks a bit awkward because I used the same for loop twice so that it cannot skip the content of the first page.

Another type of scraper pulls snippets and text from websites that rank high for the keywords it has targeted. This way they hope to rank highly in the search engine results pages (SERPs), piggybacking on the original page's page rank. This was when content penalties were introduced, used mainly to eliminate scraper sites that would rip content from one site and put it on their own.

Because GoogleScraper supports many search engines, and the HTML and JavaScript of those search providers change frequently, it is often the case that GoogleScraper stops working for some search engine. When developing a search engine scraper, there are several existing tools and libraries available that can be used, extended or simply analyzed to learn from. Ruby on Rails as well as Python are also frequently used to automate scraping jobs. For highest performance, C++ DOM parsers should be considered. When developing a scraper for a search engine, almost any programming language can be used, but depending on performance requirements some languages will be favorable. An example of open source scraping software which makes use of the above-mentioned techniques is GoogleScraper.

Prior to Google's update to its search algorithm known as Panda, a type of scraper site known as an auto blog was quite common among black hat marketers, who used a technique known as spamdexing. Made for AdSense (MFA) sites are considered search engine spam that dilutes the search results with less-than-satisfactory results. The scraped content is redundant to that which would be shown by the search engine under normal circumstances, had no MFA website been found in the listings. Some scraper sites link to other sites to improve their search engine ranking through a private blog network.

The Simple SERP Scraper does exactly what it says: it scrapes Google search engine results pages.

Google does link to Wikipedia in its excerpt, which is consistent with how its other search results work and generally on the right side of the law when these things have been challenged in various places. And by scraper site, Google is really talking about sites that wholesale copy all of someone’s content, rather than aiming for a fair use excerpt. Barker did a search for "what is a scraper site", which brought up Google’s own web definition at the top of the results. And that definition technically outranks the original source of the content, Wikipedia, which comes right below.

Can you explain how to extract some image URLs from a Google search based on a keyword? I have a spreadsheet with some product titles and want to put 1 or 2 image URLs for each product in the cells beside the title cells. So there’s no way for standard formulas to deal with pagination and scrape data from multiple pages. Potentially you could modify the URL each time, depending on how it is set up, so that you change the pagination number each time, e.g. You can find a list of Google-supported language codes here.

Search engines cannot easily be tricked by changing to another IP, yet using proxies is a very important part of successful scraping. The diversity and abusive history of an IP are important as well. But even this incident did not result in a court case. Network and IP limitations are also part of the scraping defense systems. This is a specific form of screen scraping or web scraping dedicated to search engines only.

ScrapeBox has a custom search engine scraper which can be trained to harvest URLs from virtually any website that has a search feature. The custom scraper comes with approximately 30 search engines already trained, so to get started you simply need to plug in your keywords and start it running, or use the included Keyword Scraper. There’s even an engine for YouTube to harvest YouTube video URLs and one for Alexa Topsites to harvest domains with the highest traffic rankings. Training new engines is pretty easy; many people are able to train new engines just by looking at how the 30 included search engines are set up. We have a tutorial video, or our support staff can help you train specific engines you need. You can even export engine files to share with friends or work colleagues who own ScrapeBox too.

Then you will need to uncheck the “Read-only” box and click “Apply”. This action needs to be carried out in order to give the website scraper full write permissions.

If you ever need to extract results data from Google search, there’s a free tool from Google itself that is perfect for the job.
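The idea of changing the pagination number in the URL for each request can be sketched like this. The parameter names `q`, `start` and `hl` reflect how Google search URLs have commonly been laid out, but treat them as an assumption here since the layout can change; `search_page_urls` is a hypothetical helper, and the code only builds URLs without fetching anything.

```python
from urllib.parse import urlencode


def search_page_urls(query, pages, per_page=10, language="en",
                     base="https://www.google.com/search"):
    """Build one URL per results page by stepping the result offset."""
    urls = []
    for page in range(pages):
        params = {
            "q": query,                # the search keywords
            "start": page * per_page,  # offset of the first result on this page
            "hl": language,            # interface language code
        }
        urls.append(f"{base}?{urlencode(params)}")
    return urls


for url in search_page_urls("cbd oil", pages=3):
    print(url)
```

Each generated URL corresponds to one page of results, so a scraper can walk through them in order instead of relying on a single formula call.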

They don’t release “best of scraping” guides for users, and they certainly don’t post what their rules are. Scraping is a constant trial-and-error process, so please take my recommendations with a grain of salt. When you set it to short timeouts, the software will ping the search engine every single second (or every 10 seconds, etc.).

The idea for this process came from a tweet I shared about using Screaming Frog to extract the related searches that Google displays for keywords. The main thing that any SEO company targets with any customer is keyword rankings. You may have your own CRM or application that you use with your team to track customers’ KPIs, so you need to get Google search rankings. If you are interested in our search engine scraping service and want to know more about how it works, don’t hesitate to contact us today.

That is why in this guide we’re going to break down the 7 best Google proxy, API and scraping tools that make getting the SERP data you need effortless. First on our list is Scraper API, as it offers the best functionality for the lowest price compared with everyone else on this list.

It should not be a problem to scrape 10,000 keywords in 2 hours. If you are really crazy, set the maximal browsers in the config a little bit higher (at the top of the script file).
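To put the figure of 10,000 keywords in 2 hours into perspective, a quick back-of-the-envelope calculation helps. The helper names below are hypothetical, and the choice of three browser instances is an assumption used only as an example.

```python
def required_rate(keywords, hours):
    """Keywords per second needed to finish the job in the given time."""
    return keywords / (hours * 3600)


def seconds_per_keyword(keywords, hours, browsers):
    """Time budget per keyword for each browser when work is shared evenly."""
    return hours * 3600 * browsers / keywords


rate = required_rate(10_000, 2)
budget = seconds_per_keyword(10_000, 2, browsers=3)
print(f"{rate:.2f} keywords/second overall")
print(f"{budget:.2f} seconds per keyword for each of 3 browsers")
```

With three browsers, each one has a little over two seconds per keyword, including page load and any delay between requests. Adding browsers widens that budget, which is why raising the maximum number of browsers speeds up large jobs.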

View our video tutorial showing the Search Engine Scraper in action. This feature is included with ScrapeBox and is also compatible with our Automator Plugin.

To get started, open this Google Sheet and copy it to your Google Drive. Enter the search query in the yellow cell and it will instantly fetch the Google search results for your keywords.

Let’s run through the process of scraping some data from search engine results pages. You will definitely need a Google scraping service to analyze keyword positions or fetch any other information from search engine results pages. “[Icon of a Magic Wand] [check box] Automatically generate keywords by getting related keyword searches from the search engines”. It is very easy to use: just add your search prospecting queries (one per line) and scrape Google's results. The settings let you determine the locality and how many results you want to pull back.

I've been using it (the search engine scraper and the suggest one) in more than one project. Once a year or so it stops working due to changes at Google and is usually updated within a few days. In order to introduce concurrency into this library, it is necessary to define the scraping model. However, scraping is a dirty business and it often takes me a lot of time to find failing selectors or missing JS logic. So if any search engine does not yield the results you want, please create a static test case similar to this static test of Google that fails. This node module allows you to scrape search engines concurrently with different proxies. This framework controls browsers over the DevTools Protocol and makes it hard for Google to detect that the browser is automated.

We’ve just talked through 7 of the top APIs and proxy solutions for Google search engine results. With plans ranging from $29 for 250,000 Google pages to Enterprise Plans for hundreds of millions of Google pages per month, Scraper API has an option for every budget size. Scraper API is a tool designed for developers who want to scrape the web at scale without having to worry about getting blocked or banned. It handles proxies, user agents, CAPTCHAs and anti-bot measures so you don’t have to. Simply send a URL to their API endpoint or their proxy port and they handle the rest. This makes it a great option for businesses that want to affordably mine Google SERP results for SEO and market research insights.

Compunect scraping sourcecode - a range of well-known open source PHP scraping scripts, including a regularly maintained Google Search scraper for scraping advertisements and organic result pages.

The largest publicly known incident of a search engine being scraped happened in 2011, when Microsoft was caught scraping unknown keywords from Google for its own, rather new Bing service.

Sometimes the website scraper will try to save a file from a website onto your local disk. Our devs are looking for a solution to get the website scraper to automatically close the windows. It is helpful to export just the emails if you plan to use the scraped data only for newsletters and emails.

On the main GUI, at the top left-hand side, just under “Search Settings”, you will see a field called “Project Name”. This name will be used to create a folder where your scraped data will be saved and will also be used as the name of the file. For example, if I am scraping cryptocurrency and blockchain data, I would have a project name along the lines of “Cryptocurrency and Blockchain Database”. Make sure that your list of websites is saved locally in a .txt Notepad file with one URL per line (no separators). Select your website list source by specifying the location of the file.

Extract the software files using .rar extraction software. Then go to the folder “1.1.1”, right-click on it and select “Properties”.

The quality of IPs, methods of scraping, keywords requested and language/country requested can greatly affect the possible maximum rate. The more keywords a user needs to scrape and the shorter the time for the job, the more difficult scraping will be and the more developed a scraping script or tool needs to be. To scrape a search engine successfully, the two major factors are time and amount. Google is by far the largest search engine, with the most users as well as the most revenue in creative advertisements, which makes Google the most important search engine to scrape for SEO-related companies.

The process of entering a website and extracting data in an automated fashion is also often called "crawling". Search engines like Google, Bing or Yahoo get almost all their data from automated crawling bots. Search engine scraping is the process of harvesting URLs, descriptions, or other information from search engines such as Google, Bing or Yahoo.

This practice was frowned upon particularly because, often, these scraper sites would rank higher for that content than the original site or author. Since that time, it has been made clear that if you have duplicate content - essentially, if you are copying another source - then you are going to feel Google's wrath.
